Smart Mirror

In today’s fast-paced, ever-changing world it can be incredibly hard to keep up with the number of tasks that we have. This is especially true for neurodivergent people and those experiencing mental illness or disability. It can be a battle just to start the day, and then you are faced with having to take care of yourself, plan your day and potentially take medication.

This project, the Smart Mirror, is designed to be a guiding hand for those struggling with starting or finishing their days.

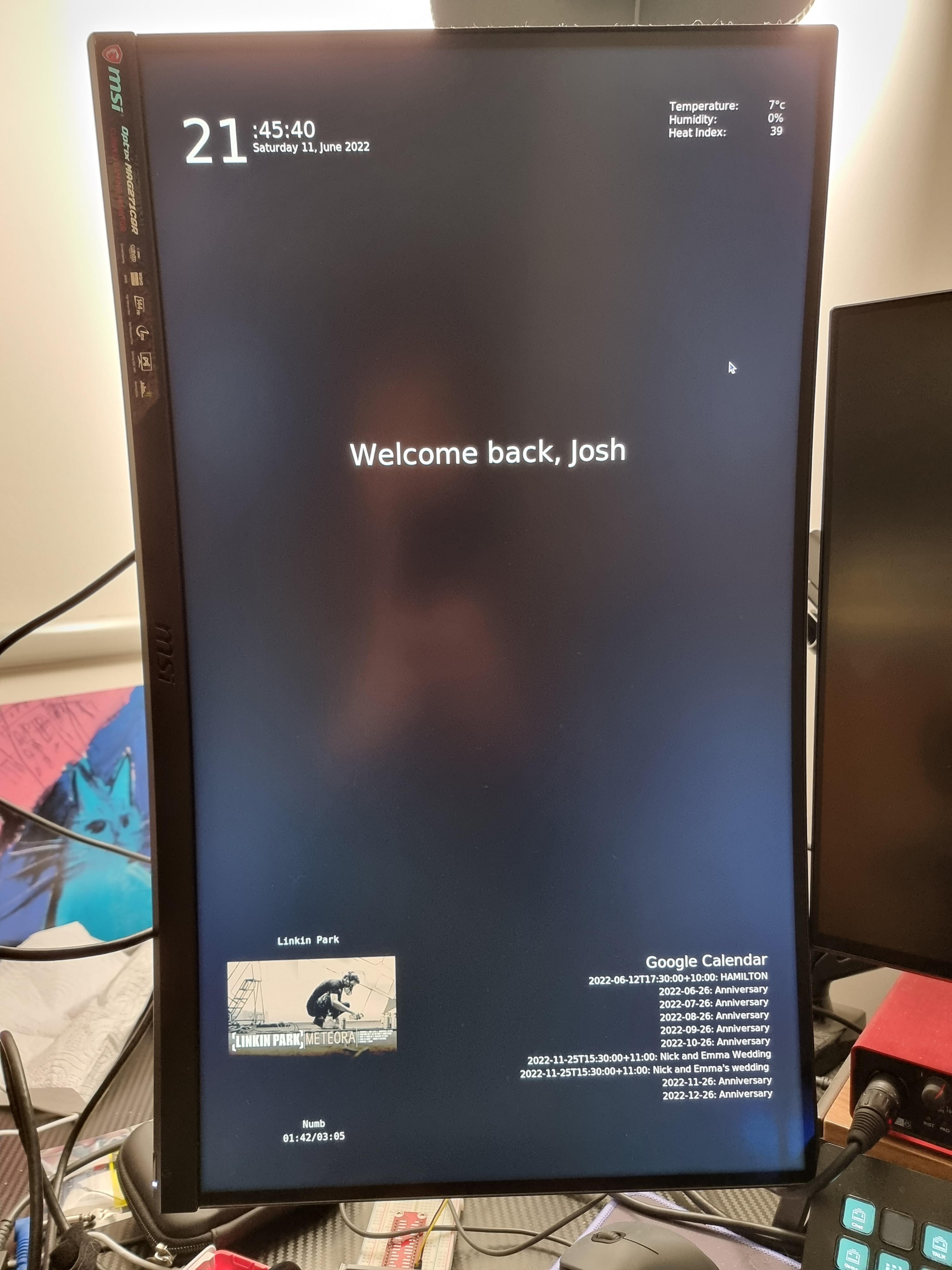

A “smart mirror” is a one-way mirror with a display behind it. When the display lights up, the light can pass through the mirror, allowing the display to be seen. By leaving most of the display black, only the desired elements show through clearly.

The smart mirror displays all sorts of useful information: the time and date, weather, currently playing music and, importantly, your calendar and schedule. It is also privacy focused, with sensitive information only being shown when the user’s face is recognised.

This tutorial is a guide to creating your own smart mirror from scratch in Python, making use of some of the many fantastic free and open-source libraries available. It serves as a proof of concept and does not cover making the one-way mirror itself. However, it is straightforward to find a frame that fits your display and apply the necessary film to turn it into a one-way mirror (my film is currently on its way from Amazon, nearly a month behind schedule!).

This project will teach you how to program the smart mirror from scratch in Python, rather than using a web-based interface like MagicMirror². As such, it requires an intermediate level of programming experience.

Supplies

Required Materials:

- Raspberry Pi 3 B+ or higher (preferably a model 4 for increased CPU power)

- USB Webcam, Raspberry Pi Camera Module or Raspberry Pi High-Quality Camera

- Micro SD Card

- Power Supply (make sure it can supply enough current for the Raspberry Pi to run at full speed, check your specific model for details)

- Monitor

- HDMI Display

- Mouse and Keyboard (for setup)

If using a Particle Argon for local measurements:

- Particle Argon

- Breadboard and jumper wires

- DHT22/AM2302 Temperature/Humidity Sensor

Python Libraries Used

- dlib==19.24.0

- face-recognition==1.3.0

- google-api-core==2.8.1

- google-api-python-client==2.50.0

- google-auth==2.7.0

- google-auth-httplib2==0.1.0

- google-auth-oauthlib==0.5.2

- googleapis-common-protos==1.56.2

- imutils==0.5.4

- oauthlib==3.2.0

- opencv-python==4.6.0.66

- Pillow==9.1.1

- requests==2.28.0

- spotipy==2.19.0

Setting Up the Environment

Our magic mirror will attempt to make use of the SOLID design principles where possible, ensuring we can easily swap out the implementations of each widget should we wish to, for example, change our smart mirror from a Spotify client to Apple Music.

The first step is to write the code for the basic interface, creating dummy interfaces as placeholders while we make sure everything is looking and acting correctly.

It's very important to start with a clean working directory. When working with Python, it is always a good idea to make use of a virtual environment. This will let you work with specific versions of packages and keep all of your projects separate, leading to less chance of unnecessary packages bloating your project.

In our terminal, we create a new environment as follows.

python3 -m venv /path/to/new/virtual/environment

And activate the environment with:

$ source <venv>/bin/activate

Once we are in our virtual environment, we can begin installing our packages.

Follow this link for a guide on how to create a new environment for your specific platform.

Making the Interface With TkInter

With our packages installed, we begin constructing the SmartMirror class, starting by importing the packages that we will be using.

Packages

# import all the necessary packages
import datetime
import time
import requests
from widgets import player, google_calendar, weather, identity
from tkinter import Label, Tk
from PIL import ImageTk, Image
from io import BytesIO

Note the following line:

from widgets import player, google_calendar, weather, identity

These aren't packages that we can install with pip! We will be implementing all of these from scratch.

We will also set several constants. These will be used throughout the program, meaning we can change them once here and see our changes reflected throughout the program.

Constants

CALENDAR_EVENTS = 10  # number of calendar events to display
WEATHER_INTERVAL = 900  # time between refreshing the weather widget, in seconds
# time between identity checks, in seconds; increase to reduce overhead from facial recognition
IDENTITY_CHECK_INTERVAL = 15
# sets the base text size
BASE_TEXT_SIZE = 4

Base Class

We will be passing in objects for all the functionality of the widgets. Each type of widget (e.g. AbstractPlayerInterface) will have the same methods as every other widget of the same type, so we can pass in any object that inherits from the AbstractPlayerInterface class. When we're prototyping the interface, we will make use of a dummy client class which returns the same song information every time, without us having to worry about setting up our Spotify developer account. Later on, we can pass in our SpotifyClient class without having to change anything else in our SmartMirror class.

class SmartMirror:
    def __init__(self,
                 master,
                 user: str,
                 identifier: identity.AbstractIdentifier,
                 player_client: player.AbstractPlayerInterface,
                 weather_interface: weather.AbstractWeatherInterface):
        self.master = master
        # set title, and make fullscreen with a black background which will not show through the mirror
        self.master.title('Smart Mirror')
        self.master.attributes("-fullscreen", True)
        self.master.configure(background='black')
        self.master.bind("<Escape>", lambda event: self.close())
        # set user
        self.user = user
        # set up identifier (dummy or real facial recognition)
        self.identifier = identifier
        # weather widget interface
        self.weather_interface = weather_interface
        # player client, i.e. Spotify or dummy interface
        self.player_client = player_client
        self.setup()
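To see why passing the widget objects in is convenient, here is a minimal, GUI-free sketch of the same idea. The names PlayerInterface, FixedTrackClient, NothingPlayingClient and MirrorSketch are made up for this illustration and are not part of the project; the point is only that the mirror class talks to the interface and never needs to know which implementation it was given.

```python
from abc import ABC, abstractmethod


class PlayerInterface(ABC):
    """Hypothetical stand-in for AbstractPlayerInterface."""

    @abstractmethod
    def get_current_track_info(self) -> dict:
        raise NotImplementedError


class FixedTrackClient(PlayerInterface):
    """Always reports the same track, like a dummy client used for prototyping."""

    def get_current_track_info(self) -> dict:
        return {"track": "Numb", "artists": "Linkin Park"}


class NothingPlayingClient(PlayerInterface):
    """Reports that nothing is playing."""

    def get_current_track_info(self) -> dict:
        return {"track": "", "artists": ""}


class MirrorSketch:
    """Simplified mirror that only knows about the interface, not the implementation."""

    def __init__(self, player_client: PlayerInterface):
        self.player_client = player_client

    def now_playing(self) -> str:
        info = self.player_client.get_current_track_info()
        if not info["track"]:
            return "Nothing playing"
        return f"{info['track']} - {info['artists']}"


# Swapping the implementation requires no change to MirrorSketch
print(MirrorSketch(FixedTrackClient()).now_playing())
print(MirrorSketch(NothingPlayingClient()).now_playing())
```

Swapping Spotify for another music service would then only mean writing one more class that implements the same method.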

Handling Closing

This method will be called whenever the application is closed by pressing the escape key. It takes care of releasing any resources taken up by the application.

    def close(self):
        print("Closing safely")
        # GPIO.cleanup()
        self.master.destroy()

Setting up the grid

While we've created our base class, it still can't do anything. We need to set up the grid that will make up the interface of our mirror. We're going to create the self.setup() method that we called at the end of __init__.

    def setup(self):
        self.grid_setup()
        self.init_system_clock()
        self.init_spotify_widget()
        self.init_google_calendar()
        self.init_weather()
        self.init_greeting()
        self.refresh()

This method will instantiate the grid as well as initialise each of the widgets we will be implementing.

The actual grid setup is fairly straightforward. We make use of the variables padx and pady so that we can change just one variable to affect all the sections.

The grid is divided up into 5 sections, top left, top right, middle, bottom left and bottom right. Each section will be home to a widget.

    def grid_setup(self):
        padx = 50
        pady = 75
        self.top_left = Label(self.master, bg='black', width=30)
        self.top_left.grid(row=0, column=0, sticky="NW", padx=(padx, 0), pady=(pady, 0))
        self.top_right = Label(self.master, bg='black', width=30)
        self.top_right.grid(row=0, column=1, sticky="NE", padx=(0, padx), pady=(pady, 0))
        self.middle_middle = Label(self.master, bg='black', width=30)
        self.middle_middle.grid(row=1, column=0, sticky="N", columnspan=2)
        self.bottom_left = Label(self.master, bg='black', width=30)
        self.bottom_left.grid(row=2, column=0, sticky="SW", padx=(padx, 0), pady=(0, pady))
        self.bottom_right = Label(self.master, bg='black', width=30)
        self.bottom_right.grid(row=2, column=1, sticky="SE", padx=(0, padx), pady=(0, pady+50))
        self.master.grid_columnconfigure(0, weight=1)
        self.master.grid_columnconfigure(1, weight=1)
        self.master.grid_rowconfigure(0, weight=1)
        self.master.grid_rowconfigure(1, weight=1)
        self.master.grid_rowconfigure(2, weight=1)

With this done, we've created the skeleton for our interface, but we still don't have any widgets. While we're here though, let's write the code that will update them. Each widget is going to have a refresh method. We call these early on in the code and each method will take care of re-calling itself after a certain period. Ideally, we would make use of multithreading here so that each widget could work independently. Unfortunately, Tkinter doesn't play well with multithreading out of the box, as the GUI must be run in the main thread. It would definitely be possible to improve performance by rewriting the code to use multithreading though. Perhaps in another tutorial!

Refresh Methods

    def refresh(self):
        self.refresh_clock()
        self.refresh_spotify()
        self.refresh_google_calendar()
        self.refresh_weather()
        self.refresh_greeting()
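The "each method re-calls itself" pattern can be hard to picture without a running GUI, so here is a small sketch that imitates it. FakeScheduler is a hypothetical stand-in for the timer that Tkinter provides through after(), and WidgetSketch is an invented placeholder for a widget; in the real mirror, Tkinter's event loop does the job of run_until().

```python
import heapq


class FakeScheduler:
    """Hypothetical stand-in for Tkinter's after(): runs callbacks in time order."""

    def __init__(self):
        self.now_ms = 0
        self._queue = []
        self._counter = 0  # tie-breaker so callbacks themselves are never compared

    def after(self, delay_ms, callback):
        heapq.heappush(self._queue, (self.now_ms + delay_ms, self._counter, callback))
        self._counter += 1

    def run_until(self, end_ms):
        # pop and run every callback that is due up to and including end_ms
        while self._queue and self._queue[0][0] <= end_ms:
            self.now_ms, _, callback = heapq.heappop(self._queue)
            callback()
        self.now_ms = end_ms


class WidgetSketch:
    """Invented widget that counts how often it has been refreshed."""

    def __init__(self, scheduler, interval_ms):
        self.scheduler = scheduler
        self.interval_ms = interval_ms
        self.refresh_count = 0

    def refresh(self):
        self.refresh_count += 1
        # re-arm the timer, just as each refresh method re-schedules itself
        self.scheduler.after(self.interval_ms, self.refresh)


scheduler = FakeScheduler()
clock = WidgetSketch(scheduler, interval_ms=200)
weather = WidgetSketch(scheduler, interval_ms=900)
clock.refresh()
weather.refresh()
scheduler.run_until(1000)  # simulate one second of the event loop
print(clock.refresh_count, weather.refresh_count)
```

Each widget keeps its own rhythm even though everything runs in a single thread, which is why one slow refresh (a network request, for example) delays all the others.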

Clock Widget

This is the easiest widget to implement as we don't have to worry about interfacing with any other services. First we just need to create a tkInter label for each of the elements of the clock. We have a separate label for hours, minutes/seconds and the date.

Initialising widget

    def init_system_clock(self):
        self.clock_hour = Label(self.master,
                                font=('Bebas Neue', BASE_TEXT_SIZE*16),
                                bg='black',
                                fg='white')
        self.clock_hour.grid(in_=self.top_left,
                             row=0,
                             column=0,
                             rowspan=2,
                             sticky="W")
        self.clock_min_sec = Label(self.master,
                                   font=('Bebas Neue', BASE_TEXT_SIZE*6),
                                   bg='black',
                                   fg='white')
        self.clock_min_sec.grid(in_=self.top_left,
                                row=0,
                                column=1,
                                columnspan=3,
                                sticky="W")
        self.date_frame = Label(self.master,
                                font=('Bebas Neue', BASE_TEXT_SIZE*3),
                                bg='black',
                                fg='white')
        self.date_frame.grid(in_=self.top_left,
                             row=0,
                             column=1,
                             columnspan=3,
                             sticky="S")

To update the widget, we will make use of one of the refresh methods we called earlier: refresh_clock().

    def refresh_clock(self):
        self.system_hour()
        self.system_min_sec()
        self.system_date()

All this method does is call the individual methods for each element of the clock. These methods will fetch the relevant time information and format it correctly for each frame inside the widget. The after method tells the frame to re-call the method after a certain interval in milliseconds.

Getting the time

    def system_hour(self, hour=''):
        next_hour = time.strftime("%H")
        # only touch the label when the value has actually changed
        if next_hour != hour:
            hour = next_hour
            self.clock_hour.config(text=hour)
        # pass the current value along so the next call can compare against it
        self.clock_hour.after(200, self.system_hour, hour)

    def system_min_sec(self, min_sec=''):
        next_min_sec = time.strftime(":%M:%S")
        if next_min_sec != min_sec:
            min_sec = next_min_sec
            self.clock_min_sec.config(text=min_sec)
        self.clock_min_sec.after(200, self.system_min_sec, min_sec)

    def system_date(self):
        system_date = datetime.date.today()
        self.date_frame.config(text=system_date.strftime("%A %d, %B %Y"))
        # check again in 24 hours (after() takes milliseconds)
        self.date_frame.after(24*60*60*1000, self.system_date)
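One thing to be aware of with a fixed 24-hour interval is that it is measured from whenever the mirror was started, so the date label can stay on yesterday's date for a while after midnight. A possible refinement, sketched here with a hypothetical helper called ms_until_next_midnight (not part of the original project), is to work out how long is left until the next midnight and schedule the refresh for that moment.

```python
import datetime


def ms_until_next_midnight(now: datetime.datetime) -> int:
    """Milliseconds from `now` until the start of the next day."""
    tomorrow = now.date() + datetime.timedelta(days=1)
    midnight = datetime.datetime.combine(tomorrow, datetime.time.min)
    remaining = midnight - now
    # add 1 ms so the callback never fires a fraction of a millisecond early
    return int(remaining.total_seconds() * 1000) + 1


# 30 seconds before midnight
example = datetime.datetime(2022, 6, 20, 23, 59, 30)
print(ms_until_next_midnight(example))
```

Inside system_date this could replace the fixed interval, for example self.date_frame.after(ms_until_next_midnight(datetime.datetime.now()), self.system_date).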

Spotify

Initialise Widget

The Spotify widget is made up of two sections. First is actually initialising the widget in the main block of code. This will set up the space for the song information and album art. However, the actual code that will interface with Spotify is housed in a separate .py file that we imported earlier.

    ######################
    ##     Spotify      ##
    ######################
    def init_spotify_widget(self):
        '''
        generates cells for the spotify widget
        '''
        # Currently Playing Artist
        self.spotify_artist = Label(self.master,
                                    font=('consolas', BASE_TEXT_SIZE*3),
                                    bg='black',
                                    fg='white')
        self.spotify_artist.grid(in_=self.bottom_left,
                                 row=0,
                                 column=0,
                                 rowspan=1,
                                 columnspan=2,
                                 sticky="N")
        # Currently Playing Album Art
        # forward slashes work on Windows as well, so a single path is enough
        self.img = ImageTk.PhotoImage(Image.open('images/default_album_art.png'))
        self.spotify_art = Label(self.master,
                                 image=self.img, border=0)
        self.spotify_art.grid(in_=self.bottom_left,
                              row=1,
                              column=0,
                              rowspan=1,
                              columnspan=1,
                              sticky="S")
        # Currently Playing Song Title
        self.spotify_track = Label(self.master,
                                   font=('consolas', BASE_TEXT_SIZE*3),
                                   bg='black',
                                   fg='white')
        self.spotify_track.grid(in_=self.bottom_left,
                                row=3,
                                column=0,
                                columnspan=1,
                                sticky="N")
        # Currently Playing Progress
        self.spotify_track_time = Label(self.master,
                                        font=('consolas', BASE_TEXT_SIZE*3),
                                        bg='black',
                                        fg='white')
        self.spotify_track_time.grid(in_=self.bottom_left,
                                     row=4,
                                     column=0,
                                     columnspan=1,
                                     sticky="S")

Refreshing Data

These are all the bones of the widget, but we haven't actually given them any content. This method will call the interface that we passed in when creating the SmartMirror class. All this method knows is that whatever player client it gets is going to return the current track information as a dictionary with the keys track, artists, duration, progress and image.

    def refresh_spotify(self, current_track_info=''):
        # get new track info
        next_track_info = self.player_client.get_current_track_info()
        # convert track time into string from track info
        track_time = f"{next_track_info['progress']}/{next_track_info['duration']}"
        # check if track info has changed
        if next_track_info != current_track_info:
            # update all track info
            current_track_info = next_track_info
            # centre the track and artist names and limit them to 20 characters
            artist_name = '{name: ^20}'.format(name=current_track_info['artists'])
            track_name = '{name: ^20}'.format(name=current_track_info['track'])
            self.spotify_artist.config(text=artist_name[:20])
            self.spotify_track.config(text=track_name[:20])
            self.spotify_track_time.config(text=track_time)
            # Update Album Art
            # if track has art (image is None if there is no playback information)
            if current_track_info['image'] is not None:
                response = requests.get(current_track_info['image']['url'])
                image_bytes = BytesIO(response.content)
                # must store a reference to the image on self, otherwise it is garbage collected
                self.spotify_album_art = ImageTk.PhotoImage(Image.open(image_bytes))
            # if track doesn't have art (nothing playing or no art uploaded) display placeholder image
            else:
                self.spotify_album_art = self.img
            self.spotify_art.config(image=self.spotify_album_art)
        # call again after 200 ms, passing the current info along for the next comparison
        self.spotify_artist.after(200, self.refresh_spotify, current_track_info)
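The track dictionary that refresh_spotify compares includes the playback progress, so it changes at least once a second while music is playing, and every change triggers a fresh download of the album art even though the artwork URL is usually the same. One way to avoid that, sketched below, is to remember the last URL and only fetch when it changes. AlbumArtCache and fake_fetch are hypothetical names for this illustration; in the mirror the fetch function would wrap requests.get(url).content.

```python
from typing import Callable, Optional


class AlbumArtCache:
    """Remembers the last downloaded image so unchanged artwork is not fetched again."""

    def __init__(self, fetch: Callable[[str], bytes]):
        self._fetch = fetch  # assumed to take a URL and return the raw image bytes
        self._last_url: Optional[str] = None
        self._last_bytes: Optional[bytes] = None

    def get(self, url: str) -> bytes:
        # only hit the network when the artwork URL has changed
        if url != self._last_url:
            self._last_bytes = self._fetch(url)
            self._last_url = url
        return self._last_bytes


# Demonstration with a fake downloader that records its calls
calls = []


def fake_fetch(url: str) -> bytes:
    calls.append(url)
    return b"image-bytes-for-" + url.encode()


cache = AlbumArtCache(fake_fetch)
for _ in range(5):
    cache.get("https://example.com/cover-a.png")
cache.get("https://example.com/cover-b.png")
print(len(calls))  # number of real downloads
```

Five refreshes of the same track and one track change result in only two downloads.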

Abstract Interface

This is the abstract interface that all our music clients will be based on.

import datetime
import os
from abc import ABC, abstractmethod

import spotipy
from spotipy.oauth2 import SpotifyOAuth

DEBUG = False


class AbstractPlayerInterface(ABC):
    @abstractmethod
    def get_current_track_info(self, debug=False):
        raise NotImplementedError

For testing purposes, we will create a dummy class that returns a placeholder song.

class DummyMusicClient(AbstractPlayerInterface):

    # overrides interface get_current_track_info method
    def get_current_track_info(self, debug=False):
        current_track_info = {'track': 'Numb',
                              'artists': 'Linkin Park',
                              'duration': '03:05',
                              'progress': '01:42',
                              'image': {'height': 300,
                                        'url': 'https://i.scdn.co/image/ab67616d00001e02b4ad7ebaf4575f120eb3f193',
                                        'width': 300}}
        return current_track_info

Fetching Data from Spotify

To get data from Spotify, we need to make use of the Spotify APIs. We use a package called Spotipy to access them. In order to use the APIs, you will need a Spotify Developer account, which gives you a Client ID and a Client Secret to pass to Spotipy. These need to be kept secret, otherwise anyone can make use of your developer account. To keep them secure, we are going to set them as environment variables, which will not be uploaded to the repository. See here for setting your app settings, authorisation and using the Spotipy API.

Make sure to whitelist your SPOTIPY_REDIRECT_URI, otherwise Spotify will not let you redirect back to your application. Once you have provided the necessary environment variables and implemented the Spotipy authorisation manager, you will be taken to an online OAuth portal to grant access to your application. If you've configured everything correctly, it should save a token file that will be used for reauthenticating every hour, so you don't have to repeatedly log in.

class SpotifyClient(AbstractPlayerInterface):
    # parameters necessary for creating a spotify client
    # SPOTIPY_CLIENT_ID and SPOTIPY_CLIENT_SECRET are stored as environment variables, you must provide your own
    SPOTIFY_GET_CURRENT_TRACK_URL = 'https://api.spotify.com/v1/me/player'
    SCOPES = ['user-read-playback-state']
    SPOTIPY_CLIENT_ID = os.getenv('SPOTIPY_CLIENT_ID')
    SPOTIPY_CLIENT_SECRET = os.getenv('SPOTIPY_CLIENT_SECRET')
    SPOTIPY_REDIRECT_URI = 'http://localhost:5000/'

    def __init__(self):
        self.sp = spotipy.Spotify(auth_manager=SpotifyOAuth(client_id=self.SPOTIPY_CLIENT_ID,
                                                            client_secret=self.SPOTIPY_CLIENT_SECRET,
                                                            redirect_uri=self.SPOTIPY_REDIRECT_URI,
                                                            scope=self.SCOPES))

    def get_current_track_info(self, debug=False):
        resp_json = self.sp.current_playback()
        # current_playback() returns None when nothing is playing; also make sure
        # a track item is present before reading its details
        if resp_json is not None and resp_json.get('item') is not None:
            artists = resp_json['item']['artists']
            artists_name = ', '.join(
                [artist['name'] for artist in artists]
            )
            # the API reports times in milliseconds
            duration = str(datetime.timedelta(seconds=resp_json['item']['duration_ms']//1000))
            progress = str(datetime.timedelta(seconds=resp_json['progress_ms']//1000))
            current_track_info = {
                "track": resp_json['item']['name'],
                "artists": artists_name,
                "duration": datetime.datetime.strptime(duration, '%H:%M:%S').strftime('%M:%S'),
                "progress": datetime.datetime.strptime(progress, '%H:%M:%S').strftime('%M:%S'),
                "image": resp_json['item']['album']['images'][1]
            }
        else:
            current_track_info = {
                "track": '',
                "artists": '',
                "duration": '00:00',
                "progress": '00:00',
                "image": None
            }
        if DEBUG:
            print(current_track_info)
        return current_track_info
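The millisecond values are turned into MM:SS strings above by going through timedelta and strptime. As an alternative sketch, the same conversion can be done with plain integer arithmetic. The helper name format_ms is hypothetical, and note one difference in behaviour: for anything longer than an hour this version keeps counting minutes (for example 75:00) instead of dropping the hours.

```python
def format_ms(milliseconds: int) -> str:
    """Format a duration in milliseconds as MM:SS."""
    total_seconds = milliseconds // 1000
    minutes, seconds = divmod(total_seconds, 60)
    return f"{minutes:02d}:{seconds:02d}"


print(format_ms(185000))  # 03:05
print(format_ms(102500))  # 01:42
```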

Google Calendar

Google provides its own Python API for Google Calendar, and luckily there is a lot of documentation on it. You will need to follow Google's instructions for generating your credentials.json before you are able to use the Google OAuth portal to approve your application. Just like Spotify, this will save your details in a token so that it doesn't have to repeatedly reauthorise. Never share your credentials or tokens with anyone.

Google Client

from __future__ import print_function

import datetime
import os.path

from google.auth.transport.requests import Request
from google.oauth2.credentials import Credentials
from google_auth_oauthlib.flow import InstalledAppFlow
from googleapiclient.discovery import build
from googleapiclient.errors import HttpError

# If modifying these scopes, delete the file token.json.
SCOPES = ['https://www.googleapis.com/auth/calendar.readonly']


def get_calendar_events(num_events=10, debug=False):
    """Fetch the next num_events events from the user's primary calendar.

    Returns a list of event dictionaries, which is empty if there are no
    upcoming events or the request fails.
    """
    creds = None
    # The file token.json stores the user's access and refresh tokens, and is
    # created automatically when the authorization flow completes for the first
    # time.
    if os.path.exists('token.json'):
        creds = Credentials.from_authorized_user_file('token.json', SCOPES)
    # If there are no (valid) credentials available, let the user log in.
    if not creds or not creds.valid:
        if creds and creds.expired and creds.refresh_token:
            creds.refresh(Request())
        else:
            flow = InstalledAppFlow.from_client_secrets_file(
                'credentials.json', SCOPES)
            creds = flow.run_local_server(port=0)
        # Save the credentials for the next run
        with open('token.json', 'w') as token:
            token.write(creds.to_json())

    # start with an empty list so there is always something to return
    events = []
    try:
        service = build('calendar', 'v3', credentials=creds)
        # Call the Calendar API
        now = datetime.datetime.utcnow().isoformat() + 'Z'  # 'Z' indicates UTC time
        if debug:
            print(f'Getting the upcoming {num_events} events')
        events_result = service.events().list(calendarId='primary', timeMin=now,
                                              maxResults=num_events, singleEvents=True,
                                              orderBy='startTime').execute()
        events = events_result.get('items', [])
        if not events:
            print('No upcoming events found.')
            return []
        # Prints the start and name of each event
        if debug:
            for event in events:
                start = event['start'].get('dateTime', event['start'].get('date'))
                print(start, event['summary'])
    except HttpError as error:
        print('An error occurred: %s' % error)
    return events

Initialising Interface

We initialise the Google Calendar widget the same way as we did with Spotify. There are some differences though. As your calendar can contain confidential information, we will only display the calendar events when the facial recognition verifies your identity. So we have separate methods for fetching the calendar data and for displaying it: refresh_google_calendar() updates the self.calendar_events list, while update_google_calendar() takes an authorised value and either shows the calendar information in the widgets or hides it.

    ######################
    ## Google Calendar  ##
    ######################
    def init_google_calendar(self):
        '''
        Creates google calendar widget
        '''
        # heading for the calendar widget
        self.google_calendar = Label(self.master, text='Google Calendar',
                                     font=('Bebas Neue', BASE_TEXT_SIZE*5),
                                     bg='black',
                                     fg='white')
        self.google_calendar.grid(in_=self.bottom_right,
                                  row=0,
                                  column=1,
                                  columnspan=1,
                                  sticky="NE")
        self.calendar_events = []
        self.calendar_widgets = []
        # one label per event, each starting out blank
        for i in range(CALENDAR_EVENTS):
            self.calendar_events.append(" ")
            self.calendar_widgets.append(Label(self.master,
                                               text=self.calendar_events[i],
                                               font=('Bebas Neue', BASE_TEXT_SIZE*3),
                                               bg='black',
                                               fg='white'))
            self.calendar_widgets[i].grid(in_=self.bottom_right,
                                          row=1+i,
                                          column=1,
                                          columnspan=1,
                                          sticky="NE")

    def refresh_google_calendar(self):
        '''
        Refresh Google Calendar data
        '''
        # treat a missing result as "no events"
        events = google_calendar.get_calendar_events(num_events=CALENDAR_EVENTS) or []
        # clear old entries so stale events do not linger when fewer are returned
        self.calendar_events = [" "] * CALENDAR_EVENTS
        for i, event in enumerate(events[:CALENDAR_EVENTS]):
            start = event['start'].get('dateTime', event['start'].get('date'))
            event_text = f"{start}: {event.get('summary', '')}"
            self.calendar_events[i] = event_text
        self.calendar_widgets[0].after(10000, self.refresh_google_calendar)

    def update_google_calendar(self, authorised: bool):
        '''
        Update Google Calendar Widget
        '''
        if authorised:
            for i in range(CALENDAR_EVENTS):
                self.calendar_widgets[i].config(text=self.calendar_events[i])
        else:
            for i in range(CALENDAR_EVENTS):
                self.calendar_widgets[i].config(text="information hidden")
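The start value that comes back from the calendar is a raw timestamp string for timed events (or a plain date for all-day events), which is not very pleasant to read on a mirror. Below is a small sketch of how it could be tidied up before being displayed. The helper name format_event_start and the chosen output format are assumptions made for this example, not part of the original project.

```python
import datetime


def format_event_start(start: str) -> str:
    """Turn a calendar start value into a short, readable label."""
    if "T" not in start:
        # all-day events only carry a date, e.g. "2022-06-21"
        day = datetime.date.fromisoformat(start)
        return day.strftime("%a %d %b") + " (all day)"
    # timed events carry a full timestamp; older Python versions do not
    # accept a trailing "Z", so swap it for an explicit UTC offset
    moment = datetime.datetime.fromisoformat(start.replace("Z", "+00:00"))
    return moment.strftime("%a %d %b %H:%M")


print(format_event_start("2022-06-20T09:30:00+10:00"))
print(format_event_start("2022-06-21"))
```

In refresh_google_calendar the result would simply take the place of start when building event_text.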

Weather Widget

The weather widget is the simplest of them all, with only one label. The refresh_weather() method takes advantage of f-strings to format all three metrics into one text field, separated by line breaks.

    ######################
    ##     Weather      ##
    ######################
    def init_weather(self):
        '''
        Creates weather widget
        '''
        # Weather Readings
        self.weather_widget = Label(self.master,
                                    font=('Bebas Neue', BASE_TEXT_SIZE*3),
                                    bg='black',
                                    fg='white',
                                    justify='left')
        self.weather_widget.grid(in_=self.top_right,
                                 row=0,
                                 column=3,
                                 sticky="NE",
                                 rowspan=3)

    def refresh_weather(self):
        # get weather information from weather object
        temperature = self.weather_interface.get_temperature()
        humidity = self.weather_interface.get_humidity()
        heat_index = self.weather_interface.calculate_heat_index(temperature=temperature, humidity=humidity, is_farenheit=False)
        # format and display information
        # (calculate_heat_index returns its result in degrees Fahrenheit)
        self.weather_widget.config(text=f"Temperature: \t{round(temperature)}°C\nHumidity: \t{round(humidity)}%\nHeat Index: \t{round(heat_index)}°F")
        # update temperature every 15 minutes (controlled by the WEATHER_INTERVAL constant)
        self.weather_widget.after(WEATHER_INTERVAL*1000, self.refresh_weather)

The actual implementation of the weather interface is more complex, though. We create another abstract class so that we can create a dummy model, or change to an online weather service at a later date. We include concrete implementations to calculate the heat index and convert from Celsius to Fahrenheit so that all our implementations have access to these methods.

Abstract Interface

from abc import ABC, abstractmethod
import math
import random

from widgets import thingspeak

DEBUG = False


class AbstractWeatherInterface(ABC):
    '''
    Abstract class for fetching temperature and humidity.
    '''
    @abstractmethod
    def get_temperature(self) -> float:
        ''' Fetch the temperature '''
        raise NotImplementedError

    @abstractmethod
    def get_humidity(self) -> float:
        ''' Fetch the humidity '''
        raise NotImplementedError

    def convert_to_f(self, temp_c: float) -> float:
        temp_as_f = temp_c * 1.8 + 32
        return temp_as_f

    def calculate_heat_index(self, temperature: float, humidity: float, is_farenheit: bool) -> float:
        ''' Calculate the heat index (in degrees Fahrenheit) based on temperature and humidity '''
        heat_index = 0
        # the formulas below work in Fahrenheit, so convert Celsius input first
        if not is_farenheit:
            temperature = self.convert_to_f(temperature)
        # simple first estimate
        heat_index = 0.5 * (temperature + 61.0 + ((temperature - 68.0) * 1.2) + (humidity * 0.094))
        # for hotter conditions, switch to the full regression
        if heat_index > 79:
            heat_index = (-42.379 +
                          2.04901523 * temperature +
                          10.14333127 * humidity +
                          -0.22475541 * temperature * humidity +
                          -0.00683783 * pow(temperature, 2) +
                          -0.05481717 * pow(humidity, 2) +
                          0.00122874 * pow(temperature, 2) * humidity +
                          0.00085282 * temperature * pow(humidity, 2) +
                          -0.00000199 * pow(temperature, 2) * pow(humidity, 2))
            # adjustments for very dry or very humid air
            if (humidity < 13) and (temperature >= 80.0) and (temperature <= 112.0):
                heat_index -= ((13.0 - humidity) * 0.25) * math.sqrt((17.0 - abs(temperature - 95.0)) * 0.05882)
            elif (humidity > 85.0) and (temperature >= 80.0) and (temperature <= 87.0):
                heat_index += ((humidity - 85.0) * 0.1) * ((87.0 - temperature) * 0.2)
        return heat_index
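It is worth noting that calculate_heat_index converts a Celsius input to Fahrenheit but does not convert the result back, so the value it returns is on the Fahrenheit scale. The short worked example below shows the simple first-pass formula for a mild day and how the result could be converted back to Celsius for display. The helper names fahrenheit_to_celsius and simple_heat_index_f are hypothetical and only exist for this illustration.

```python
def fahrenheit_to_celsius(temp_f: float) -> float:
    """Inverse of convert_to_f."""
    return (temp_f - 32) / 1.8


def simple_heat_index_f(temp_f: float, humidity: float) -> float:
    """First-pass heat index estimate in Fahrenheit (used when the result is 79 or below)."""
    return 0.5 * (temp_f + 61.0 + ((temp_f - 68.0) * 1.2) + (humidity * 0.094))


temp_c = 25.0
temp_f = temp_c * 1.8 + 32  # 77 degrees Fahrenheit
heat_index_f = simple_heat_index_f(temp_f, humidity=50.0)
heat_index_c = fahrenheit_to_celsius(heat_index_f)
print(round(heat_index_f, 2), round(heat_index_c, 2))
```

For 25 °C and 50 % humidity the estimate is just under 77 °F, which is a little below 25 °C, so on a mild day the heat index stays close to the measured temperature.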

Our Dummy interface just chooses a random value for temperature and humidity.

class DummyWeather(AbstractWeatherInterface):
    '''
    Dummy interface for testing module without connecting hardware, generates random temperature and humidity.
    Super class converts to Fahrenheit and calculates heat index
    '''
    def get_temperature(self) -> float:
        ''' Fetch the temperature, overrides interface method '''
        temperature = random.random() * random.randint(1, 40)
        return temperature

    def get_humidity(self) -> float:
        ''' Fetch the humidity, overrides interface method '''
        humidity = random.random()*100
        return humidity

    def convert_to_f(self, temp_c) -> float:
        return super().convert_to_f(temp_c)

    def calculate_heat_index(self, temperature: float, humidity: float, is_farenheit: bool) -> float:
        return super().calculate_heat_index(temperature, humidity, is_farenheit)

Fetching Data from the Cloud

To fetch data from ThingSpeak we create another concrete implementation, but we will need to create a method to actually interpret the data.

class ThingSpeakWeather(AbstractWeatherInterface):
    '''
    Reads data from ThingSpeak channel, which is published by the Particle Argon reading
    '''
    def get_temperature(self) -> float:
        ''' Fetch the temperature, overrides interface method '''
        temperature = float(thingspeak.read_feeds(1)[0]['field1'])
        if DEBUG:
            print(temperature)
        return temperature

    def get_humidity(self) -> float:
        ''' Fetch the humidity, overrides interface method '''
        humidity = float(thingspeak.read_feeds(1)[0]['field2'])
        ## believe it or not, humidity can go above 100%. It really shouldn't in this example though, other than due to sensor error
        # if humidity > 100: humidity = 100
        if DEBUG:
            print(humidity)
        return humidity

    def convert_to_f(self, temp_c) -> float:
        return super().convert_to_f(temp_c)

    def calculate_heat_index(self, temperature: float, humidity: float, is_farenheit: bool) -> float:
        return super().calculate_heat_index(temperature, humidity, is_farenheit)

We create another module to fetch the data in JSON format. Once again, we use environment variables to store and retrieve our API keys. The read_feeds() function can retrieve a number of feeds based on the feeds value. This could be used to get an average reading over an hour for example, or to see the change in weather over the day.

import requests
import os

# READ_API_KEY=os.getenv('THINGSPEAK_READ_API_KEY')
# CHANNEL_ID=os.getenv('THINGSPEAK_CHANNEL_ID')
READ_API_KEY = os.getenv('READ_API_KEY')
CHANNEL_ID = os.getenv('CHANNEL_ID')


def read_feeds(feeds: int) -> list:
    # request the most recent `feeds` entries from the channel
    resp_json = requests.get(
        url=f"https://api.thingspeak.com/channels/{CHANNEL_ID}/feeds.json?api_key={READ_API_KEY}&results={feeds}").json()
    # collect the results under a separate name so the feeds argument is not overwritten
    readings = []
    for feed in resp_json['feeds']:
        readings.append({
            "created_at": feed['created_at'],
            "entry_id": feed['entry_id'],
            "field1": feed['field1'],
            "field2": feed['field2']
        })
    return readings
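As mentioned, fetching several feeds at once makes it possible to average the readings. Here is a minimal sketch of how that might look, working on a list in the same shape that read_feeds() returns. The helper name average_field and the sample data are invented for the example, and it assumes that field values arrive as strings and that a missing reading is None.

```python
from statistics import mean
from typing import Optional


def average_field(feeds: list, field: str) -> Optional[float]:
    """Average one field across several feed entries, skipping missing readings."""
    values = [float(feed[field]) for feed in feeds if feed.get(field) is not None]
    return mean(values) if values else None


# made-up sample data in the same shape as the read_feeds() result
sample_feeds = [
    {"created_at": "2022-06-20T09:00:00Z", "entry_id": 1, "field1": "21.5", "field2": "40.0"},
    {"created_at": "2022-06-20T09:15:00Z", "entry_id": 2, "field1": "22.5", "field2": None},
]
print(average_field(sample_feeds, "field1"))
print(average_field(sample_feeds, "field2"))
```

A get_temperature() implementation could then call something like average_field(thingspeak.read_feeds(4), 'field1') to smooth out a noisy sensor.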

Facial Recognition

Greeting

Creating a new widget should be very familiar by now. The greeting widget uses the identifier we passed when creating the SmartMirror class to determine the identity of the user.

    ######################
    ##     Greeting     ##
    ######################
    def init_greeting(self):
        '''
        Initialise greeting widget
        '''
        # create greeter interface
        self.greeter = identity.GeneralGreeting()
        # create widget
        self.greeting = Label(self.master,
                              font=('Bebas Neue', BASE_TEXT_SIZE*9),
                              bg='black',
                              fg='white')
        self.greeting.grid(in_=self.middle_middle,
                           row=0,
                           column=0,
                           columnspan=3)

    def refresh_greeting(self):
        # get user identity (named so it does not shadow the imported identity module)
        identified_user = self.identifier.get_identity()
        # if identified person is intended user, greet them
        if identified_user == self.user:
            self.greeting.config(text=self.greeter.greeting(identified_user))
            # reveal calendar
            authorised = True
        else:
            # otherwise hide calendar information
            self.greeting.config(text="Unknown User\nSensitive Information Hidden")
            # hide google calendar
            authorised = False
        self.update_google_calendar(authorised=authorised)
        self.greeting.after(IDENTITY_CHECK_INTERVAL * 1000, self.refresh_greeting)

As usual, we have a dummy implementation to test our interface.

from abc import ABC, abstractmethod
import random
#from widgets import facial_recognition


class AbstractIdentifier(ABC):
    '''
    Abstract Identification Interface
    '''
    @abstractmethod
    def get_identity(self) -> str:
        raise NotImplementedError


class DummyFacialRecognition(AbstractIdentifier):
    '''
    Dummy facial recognition for testing
    '''
    def __init__(self, identity: str) -> None:
        super().__init__()
        self.identity = identity

    def get_identity(self) -> str:
        # fail to recognise the user roughly 10% of the time
        check = random.random()
        if check < 0.1:
            return "unknown"
        else:
            return self.identity

We also create a second interface for generating greetings. You could create different concrete implementations for different styles of greetings, e.g. humorous, different languages etc.

class AbstractGreeting(ABC):
    '''
    Abstract Greeting Interface
    '''
    @abstractmethod
    def greeting(self, identity: str) -> str:
        raise NotImplementedError


class GeneralGreeting(AbstractGreeting):
    '''
    Concrete greeting implementation
    '''
    greetings = [
        "Welcome back, ",
        "Hello, ",
        "Good to see you, ",
        "Hello there, "]

    def greeting(self, identity: str) -> str:
        # pick one of the greetings at random and append the user's name
        greeting = random.choice(self.greetings) + identity
        return greeting

Our final concrete implementation will handle facial recognition. To actually process the facial recognition we will need another interface.

class FacialRecognition(AbstractIdentifier):
    '''
    Concrete facial recognition implementation
    '''
    def __init__(self, identity: str) -> None:
        super().__init__()
        self.identity = identity
        # get_identity() relies on self.model, so uncomment this line (and the
        # facial_recognition import) once the facial recognition module is in place
        #self.model = facial_recognition.facial_recognition(user=self.identity)

    def get_identity(self) -> str:
        identity = self.model.identify_user()
        return identity

Implementing Facial Recognition

Core Electronics has a great guide on using OpenCV for facial recognition. This implementation is based on the code provided there, but adapted to not show a picture and to process fewer frames for performance reasons. It also has a hard limit on the number of frames it will process before giving up until the next check. As soon as the method confirms the identity of the user it returns their name as a string, which is compared against the user's name in the refresh_greeting method.

Face Recognition

# import the necessary packages
from imutils.video import VideoStream
import face_recognition
import imutils
import pickle

MAX_FRAMES = 15


class facial_recognition():

    def __init__(self, user: str) -> None:
        self.user = user
        # load the encodings produced by the previously trained model
        try:
            with open("widgets/facial_recognition_main/encodings.pickle", "rb") as f:
                self.data = pickle.load(f)
        except FileNotFoundError:
            # raw string, so that "\f" is not read as an escape sequence
            with open(r"\widgets\facial_recognition_main\encodings.pickle", "rb") as f:
                self.data = pickle.load(f)

    def identify_user(self) -> str:
        # initialise video stream with extremely low frame rate to save processing power
        video_stream = VideoStream(src=0, framerate=5).start()
        # initialising current name to unknown means that only identified faces will trigger a reaction
        currentname = "Unknown"
        try:
            # loop over frames from the video stream until we find the user, or time out
            for _ in range(MAX_FRAMES):
                # grab the frame from the threaded video stream and resize it
                # to 500px (to speed up processing)
                frame = video_stream.read()
                if frame is None:
                    continue
                frame = imutils.resize(frame, width=500)
                # detect the face boxes
                boxes = face_recognition.face_locations(frame)
                # compute the facial embeddings for each face bounding box
                encodings = face_recognition.face_encodings(frame, boxes)
                # loop over the facial embeddings
                for encoding in encodings:
                    # attempt to match each face in the input image to our known encodings
                    matches = face_recognition.compare_faces(self.data["encodings"], encoding)
                    if True not in matches:
                        continue
                    # count the total number of times each known face was matched
                    counts = {}
                    for i, matched in enumerate(matches):
                        if matched:
                            name = self.data["names"][i]
                            counts[name] = counts.get(name, 0) + 1
                    # determine the recognized face with the largest number of votes
                    # (in the event of an unlikely tie, the first entry wins)
                    currentname = max(counts, key=counts.get)
                    # stop as soon as the user has been confirmed
                    if currentname == self.user:
                        return currentname
        except Exception:
            # a camera or processing error simply ends this check
            pass
        finally:
            video_stream.stop()
        return currentname
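The voting step is the least obvious part of the code above, so here it is in isolation. This is only a sketch: `most_voted_name` is a name made up for this example, and the lists are invented stand-ins for the result of `compare_faces` and the `names` entry of the pickle file.

```python
from typing import Dict, List


def most_voted_name(matches: List[bool], names: List[str]) -> str:
    """Return the name with the most matched encodings, or "Unknown"."""
    counts: Dict[str, int] = {}
    for matched, name in zip(matches, names):
        if matched:
            counts[name] = counts.get(name, 0) + 1
    if not counts:
        return "Unknown"
    # max() returns the first key with the highest count, so a tie goes to
    # whichever name was matched first
    return max(counts, key=counts.get)


# invented comparison results for five stored encodings
names = ["Josh", "Josh", "Alex", "Josh", "Alex"]
matches = [True, False, True, True, False]
print(most_voted_name(matches, names))  # Josh
```

Each stored encoding that matches casts one vote for its name, so a single stray match against another person is outvoted as long as the real user has more matching images.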

Training the Model

We cheat a little bit in training the model by using a separate Python program to capture images and train the model. In fact, the method used is exactly the same as in the Core Electronics tutorial! The necessary files are included in the GitHub repository. Navigate to widgets/facial_recognition_main, run headshots.py to capture images of yourself, then run train_model.py to generate the encodings.pickle file that the main program uses for facial recognition. Ensure that the encodings.pickle file is located in the correct directory: widgets/facial_recognition_main/encodings.pickle.
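Before wiring the model into the mirror, it can help to confirm that the file exists and has the shape the recognition code expects. The sketch below assumes the pickle holds a dictionary with `encodings` and `names` entries, which is what the recognition code reads; `check_encodings` is a helper invented for this example.

```python
import pickle


def check_encodings(path: str) -> int:
    """Load an encodings file and return how many face encodings it holds."""
    with open(path, "rb") as f:
        data = pickle.load(f)
    # the recognition code reads exactly these two keys
    for key in ("encodings", "names"):
        if key not in data:
            raise KeyError(f"{path} has no '{key}' entry")
    if len(data["encodings"]) != len(data["names"]):
        raise ValueError("every encoding needs a matching name")
    return len(data["names"])


if __name__ == "__main__":
    path = "widgets/facial_recognition_main/encodings.pickle"
    try:
        print(f"{check_encodings(path)} encodings found")
    except FileNotFoundError:
        print(f"{path} not found; run train_model.py first")
```

Run it from the project root so that the relative path resolves the same way it does in the main program.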

Finalise Application

All the main code is out of the way now except for, well, the main method. Here we will create our root Tkinter object and the implementations that we will use, either the dummy classes or the concrete ones.

Dummy Methods

######################
##       Main       ##
######################
if __name__ == "__main__":
    root = Tk()
    user = "Josh"
    identifier = identity.DummyFacialRecognition(user)
    player_client = player.DummyMusicClient()
    weather_interface = weather.DummyWeather()
    smart_gui = SmartMirror(root, user=user, identifier=identifier,
                            player_client=player_client,
                            weather_interface=weather_interface)
    root.mainloop()

Real Methods

######################
##       Main       ##
######################
if __name__ == "__main__":
    root = Tk()
    user = "Josh"
    identifier = identity.FacialRecognition(user)
    player_client = player.SpotifyClient()
    weather_interface = weather.ThingSpeakWeather()
    smart_gui = SmartMirror(root, user=user, identifier=identifier,
                            player_client=player_client,
                            weather_interface=weather_interface)
    root.mainloop()
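Rather than editing the main method each time, you could choose between the two sets of classes with a command-line flag. The sketch below shows the idea for the identifier only. The `--dummy` flag and `build_identifier` are hypothetical, and the two classes are bare stand-ins for the ones in the identity module so that the snippet runs on its own.

```python
import argparse
from typing import List, Optional


class DummyFacialRecognition:
    """Stand-in for identity.DummyFacialRecognition."""
    def __init__(self, identity: str) -> None:
        self.identity = identity


class FacialRecognition:
    """Stand-in for identity.FacialRecognition."""
    def __init__(self, identity: str) -> None:
        self.identity = identity


def build_identifier(user: str, argv: Optional[List[str]] = None):
    parser = argparse.ArgumentParser()
    # hypothetical flag, not part of the project
    parser.add_argument("--dummy", action="store_true",
                        help="use the dummy implementations")
    args = parser.parse_args(argv)
    cls = DummyFacialRecognition if args.dummy else FacialRecognition
    return cls(user)


print(type(build_identifier("Josh", ["--dummy"])).__name__)
print(type(build_identifier("Josh", [])).__name__)
```

Since SmartMirror only depends on the abstract interfaces, nothing else in the application needs to know which implementation was chosen.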

Now you can connect your camera module to the Raspberry Pi. If you are using a Raspberry Pi camera module, follow the instructions in the Core Electronics guide. If you are using a webcam, simply connect it over USB. Make sure your Raspberry Pi is connected to a monitor, then run your code. If you've followed the steps above correctly, you should see an image similar to the one above. Congratulations on building your smart mirror! There is, however, one more step we can take.

Bonus: Particle Argon Module

The Smart Mirror is intended to be used alongside local modules that upload data to the cloud (e.g. through ThingSpeak).

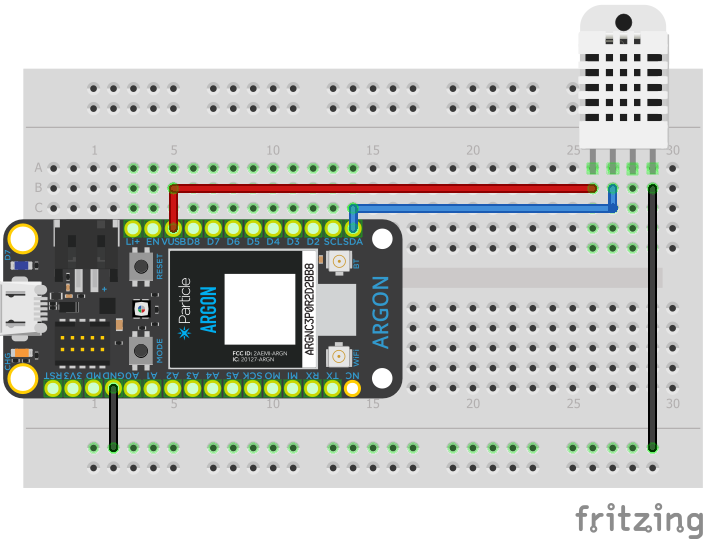

Connect the AM2302 to the Particle Argon as follows:

- VDD > VUSB (provides 5 V when the Argon is powered over USB)

- NC (not connected)

- SDA > D2

- GND > GND (Ground to Ground)

The following code takes a reading from the AM2302 every 15 seconds, but only pushes an update to ThingSpeak once a minute. As the sensor is inconsistent and sometimes provides null readings, only the maximum reading over that minute is sent to the cloud, which reduces the chance of a null value being sent. If all 4 readings in that minute are null, incorrect information can still be published, but this is much less likely. The code publishes directly to ThingSpeak without using Particle Publish events, so it is easy to substitute the Particle Argon with something like an Arduino. Make sure to copy your channel number and write API key from your ThingSpeak channel page.

#include <ThingSpeak.h>
#include <Adafruit_DHT.h>

#define DHTPIN D2      // what pin we're connected to
#define DHTTYPE DHT22  // DHT 22 (AM2302)

SYSTEM_THREAD(ENABLED);

TCPClient client;

// starting values
unsigned long startTime = 0; // start of the current one-minute window
float maxTemp = 0.0;
float maxHum = 0.0;

unsigned long myChannelNumber = *******; // change this to your channel number
const char * myWriteAPIKey = "************"; // change this to your channel's write API key

// create DHT object
DHT dht(DHTPIN, DHTTYPE);

// setup() runs once, when the device is first turned on.
void setup() {
    ThingSpeak.begin(client);
    Serial.begin(9600);
    dht.begin();
}

// loop() runs over and over again, as quickly as it can execute.
void loop() {
    delay(15000);
    unsigned long elapsedTime = millis() - startTime;

    // read temp and humidity
    float temperature = dht.getTempCelcius();
    float humidity = dht.getHumidity();

    // print to serial monitor, just for debugging
    Serial.println(String::format("Temperature: %f°C", temperature));
    Serial.println(String::format("Humidity: %f%%", humidity));

    // keep the highest reading seen in the current window
    if (temperature > maxTemp) { maxTemp = temperature; }
    if (humidity > maxHum) { maxHum = humidity; }

    // write maximum value over previous minute to ThingSpeak
    // after 1 minute has elapsed
    if (elapsedTime >= 60000) {
        // assign readings to ThingSpeak fields
        ThingSpeak.setField(1, maxTemp);
        ThingSpeak.setField(2, maxHum);

        // reset maximums
        maxTemp = 0;
        maxHum = 0;

        // write data to ThingSpeak channel
        ThingSpeak.writeFields(myChannelNumber, myWriteAPIKey);
        startTime = millis();
    }
}
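The filtering in the sketch above can be modelled in a few lines of Python. This is only an illustration: it assumes failed reads arrive as NaN, and `max_valid_reading` is a name made up for this example.

```python
from typing import Iterable


def max_valid_reading(readings: Iterable[float], floor: float = 0.0) -> float:
    """Mirror the firmware logic: keep the largest reading above `floor`."""
    best = floor
    for value in readings:
        # any comparison with NaN is False, so failed reads never replace `best`
        if value > best:
            best = value
    return best


nan = float("nan")
print(max_valid_reading([21.4, nan, 21.9, nan]))  # 21.9
print(max_valid_reading([nan, nan, nan, nan]))    # 0.0
```

The second call shows the case mentioned earlier, where every reading in the minute fails and the starting value of 0 is published. Because the running maximum starts at 0, readings below zero are discarded as well, which is worth keeping in mind if the sensor sits somewhere cold.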

Enjoy your smart mirror!

You’ve now completed all the code and hardware for your smart mirror! All that’s left is to turn it into a mirror.

This tutorial was done as part of my SIT210 Embedded Systems Development class, but I hope to expand it further. Thank you for following along with my tutorial; any feedback is much appreciated.

The code for this project can be found at https://github.com/coskun-kilinc/SIT210-Project-Smart-Mirror. Please feel free to use it and share what you come up with!