Mars Roomba

This Instructable will guide you in the directions of operating a Raspberry Pi controlled Roomba vacuum bot. The operating system we will be using is through MATLAB.

Supplies

What you will need to gather in order to carry out this project:

- iRobot's Create2 Roomba vacuum cleaner bot

- Raspberry Pi

- Raspberry Pi Camera

- The latest version of MATLAB

- The Roomba install toolbox for MATLAB

- MATLAB application for a cellular device

The Problem Statement

We were tasked to use MATLAB to develop a rover that could be used on Mars in order to assist scientists in gathering planet data. The functions that we addressed in our project were of remote control, object impact recognition, water recognition, life recognition, and image processing. To achieve these feats, we coded using the Roomba toolbox commands to manipulate the many functions of iRobot's Create2 Roomba.

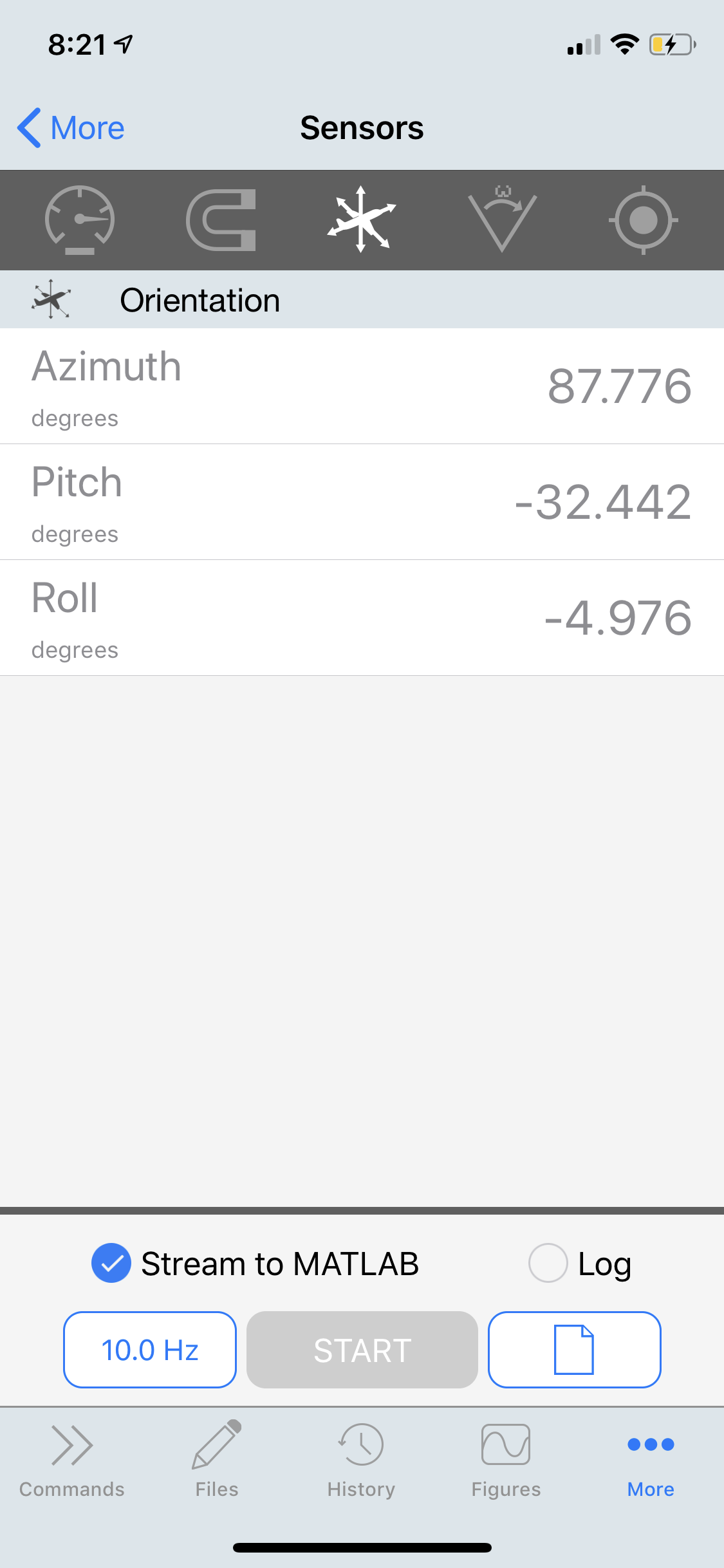

Bluetooth Remote Control

This slide will walk-through the code to control the Roomba's movement using the Bluetooth capabilities of your smartphone device. To begin, download the MATLAB application to your smartphone and log in to your Mathworks account. Once logged in, go to "more", "settings", and connect to your computer using its IP address. Once connected, go back to "more" and select "sensors." Tap onto the third sensor on the top toolbar of the screen, and tap onto start. Now, your smartphone is a remote control!

The code is as follows:

while 0 == 0

pause(.5)

PhoneData = M.Orientation;

Azi = PhoneData(1);

Pitch = PhoneData(2);

Side = PhoneData(3);

bumps = r.getBumpers;

if Side>80 || Side<-80

r.stop

r.beep('C,E,G,C^,G,E,C')

break

elseif Side>20 && Side<40

r.turnAngle(-5);

elseif Side>40

r.turnAngle(-25);

elseif Side<-20 && Side>-40

r.turnAngle(5);

elseif Side<-40

r.turnAngle(25);

end

if Pitch >10 && Pitch<35

r.moveDistance(.03)

elseif Pitch>-35 && Pitch<-10

r.moveDistance(-.03)

end

end

Impact Recognition

Another function that we implemented was to detect the impact of the Roomba into an object and then correct its current path. To do this, we had to use conditionals with the readings from the bumper sensors to determine if an object was struck. If the robot hits an object, it will back up .2 meters, and rotate at an angle determined by which bumper was struck. Once an item has been hit, a menu pops up displaying the word "oof."

The Code is shown below:

while 0 == 0

bumps = r.getBumpers;

r.setDriveVelocity(.1)

if bumps.left == 1

msgbox('Oof!');

r.moveDistance(-0.2)

r.setTurnVelocity(.2)

r.turnAngle(-35)

r.setDriveVelocity(.2)

elseif bumps.front == 1

msgbox('Oof!');

r.moveDistance(-0.2)

r.setTurnVelocity(.2)

r.turnAngle(90)

r.setDriveVelocity(.2)

elseif bumps.right == 1

msgbox('Oof!');

r.moveDistance(-0.2)

r.setTurnVelocity(.2)

r.turnAngle(35)

r.setDriveVelocity(.2)

elseif bumps.leftWheelDrop ==1

msgbox('Oof!');

r.moveDistance(-0.2)

r.setTurnVelocity(.2)

r.turnAngle(-35)

r.setDriveVelocity(.2)

elseif bumps.rightWheelDrop ==1

msgbox('Oof!');

r.moveDistance(-0.2)

r.setTurnVelocity(.2)

r.turnAngle(35)

r.setDriveVelocity(.2)

end

end

Life Recognition

We coded a life recognition system to read the colors of objects in front of it. The three kinds of life that we coded for are plants, water, and aliens. To do this, we coded the sensors to calculate the average values of red, blue, green, or white. These values were compared to the thresholds that were manually set to determine the color that the camera is looking at. The code would also plot the path to the object and creating a map.

The code is as follows:

t = 10;

i = 0;

while t == 10

img = r.getImage; imshow(img)

pause(0.167)

i = i + 1;

red_mean = mean(mean(img(:,:,1)));

blue_mean = mean(mean(img(:,:,3)));

green_mean = mean(mean(img(:,:,2)));

white_mean = (blue_mean + green_mean + red_mean) / 3; %want this val approx 100

nine_plus_ten = 21;

green_threshold = 125;

blue_threshold = 130;

white_threshold = 124;

red_threshold = 115;

while nine_plus_ten == 21 %green - life

if green_mean > green_threshold && blue_mean < blue_threshold && red_mean < red_threshold

r.moveDistance(-.1)

a = msgbox('possible life source found, location plotted');

pause(2)

delete(a)

[y2,Fs2] =audioread('z_speak2.wav');

sound(y2,Fs2)

pause(2)

%plant = r.getImage; %imshow(plant);

%save('plant_img.mat', plant');

%plot location in green

i = 5;

break

else

nine_plus_ten = 19;

end

end

nine_plus_ten = 21;

while nine_plus_ten == 21 %blue - woder

if blue_mean > blue_threshold && green_mean < green_threshold && white_mean < white_threshold && red_mean < red_threshold

r.moveDistance(-.1)

a = msgbox('a source of water has been found, location plotted');

pause(2)

delete(a)

[y3,Fs3] =audioread('z_speak3.wav');

sound(y3,Fs3);

%woder = r.getImage; %imshow(woder)

%save('water_img.mat',woder)

%plot location in blue

i = 5;

break

else

nine_plus_ten = 19;

end

end

nine_plus_ten = 21;

while nine_plus_ten == 21 %white - aliens monkaS

if white_mean > white_threshold && blue_mean < blue_threshold && green_mean < green_threshold

[y5,Fs5] =audioread('z_speak5.wav');

sound(y5,Fs5);

pause(3)

r.setDriveVelocity(0,.5)

[ys,Fss] =audioread('z_scream.mp3');

sound(ys,Fss);

pause(3)

r.stop

% alien = r.getImage; %imshow(alien);

% save('alien_img.mat', alien);

i = 5;

break

else

nine_plus_ten = 19;

end

end

if i == 5

a = 1; %turns angle

t = 9; %terminate big loop

i = 0;

end

end

Run It!!

After all the code has been written, combine it all into one file and voila! Your Roomba bot will now be fully functional and operate as advertised! However, the Bluetooth control should either be in a separate file or separated from the rest of the code with %%.

Enjoy using your robot!!