How to Use a Computer Cluster

Cluster computing is a useful resource offered by many universities and other organizations. Essentially, it's a system that allows you to run programs on a powerful computer system with many processors. This is valuable if you are running code that is difficult or slow to run, as you could run it on the cluster instead of on your own computer. However, understanding cluster computing can be daunting, so this guide will explain how to use it.

Supplies

This guide will assume that the cluster computing service you are using uses the Slurm workload manager, as it's the most common one. Other specifics may depend on your system; your organization should provide a wiki with a "Getting started" page describing some basics.

You will need the following:

- Code that you'd like to run on the cluster

- Notepad++ or a similar source code editor

- A file transfer client or method (depends on the system)

- If using personal Wi-Fi, your organization's VPN client

Setting Up Files

Identify which file transfer method your organization uses. For example, I have used a cluster at Virginia Tech that simply handles file upload/download through its own website, while at VCU, I used FileZilla, a simple file transfer application. You may also need to set up an account on the cluster; some clusters automatically create accounts for all students and staff, while others require you to request an account.

Let's say you're interested in running a script (e.g., from R, Python, or MATLAB). You will need to upload this file to the cluster, along with any supporting files/data that the script uses to run.

You will not need to upload the program (e.g., RStudio) itself. Just the script is fine. Your organization will already have many common software packages installed on the cluster.

Note: a cluster works by having many different "nodes" (individual computers, each with its own processors) that connect to one communal file storage and are accessed through one or a few login points. So, if you upload one file, all of the nodes will have access to it.
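If your cluster happens to accept file transfers over SSH (an assumption; check your organization's wiki), an upload from the command line might look like the sketch below. It assumes you have a Bash shell and the OpenSSH "scp" command on your own computer. The username and host are the examples used in this guide, while "input_data.csv" and the "my_project" folder are hypothetical names.

```shell
#!/bin/bash
# Hypothetical values: replace with your own username, host, and folder.
cluster_user="chriss04"
cluster_host="tinkercliffs1.arc.vt.edu"
remote_dir="my_project"   # assumed to already exist in your home folder on the cluster

# Build the scp command as an array so file names with spaces stay intact.
upload_cmd=(scp example_script.R input_data.csv "${cluster_user}@${cluster_host}:${remote_dir}/")

# Print the command for review. Uncomment the last line to actually run it.
printf '%s ' "${upload_cmd[@]}"
echo
# "${upload_cmd[@]}"
```

Printing the command first makes it easy to double-check the destination before anything is copied.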

Logging in to the Cluster

If you are using Wi-Fi that isn't your organization's, you'll need to connect to your organization's servers through their VPN client. Then:

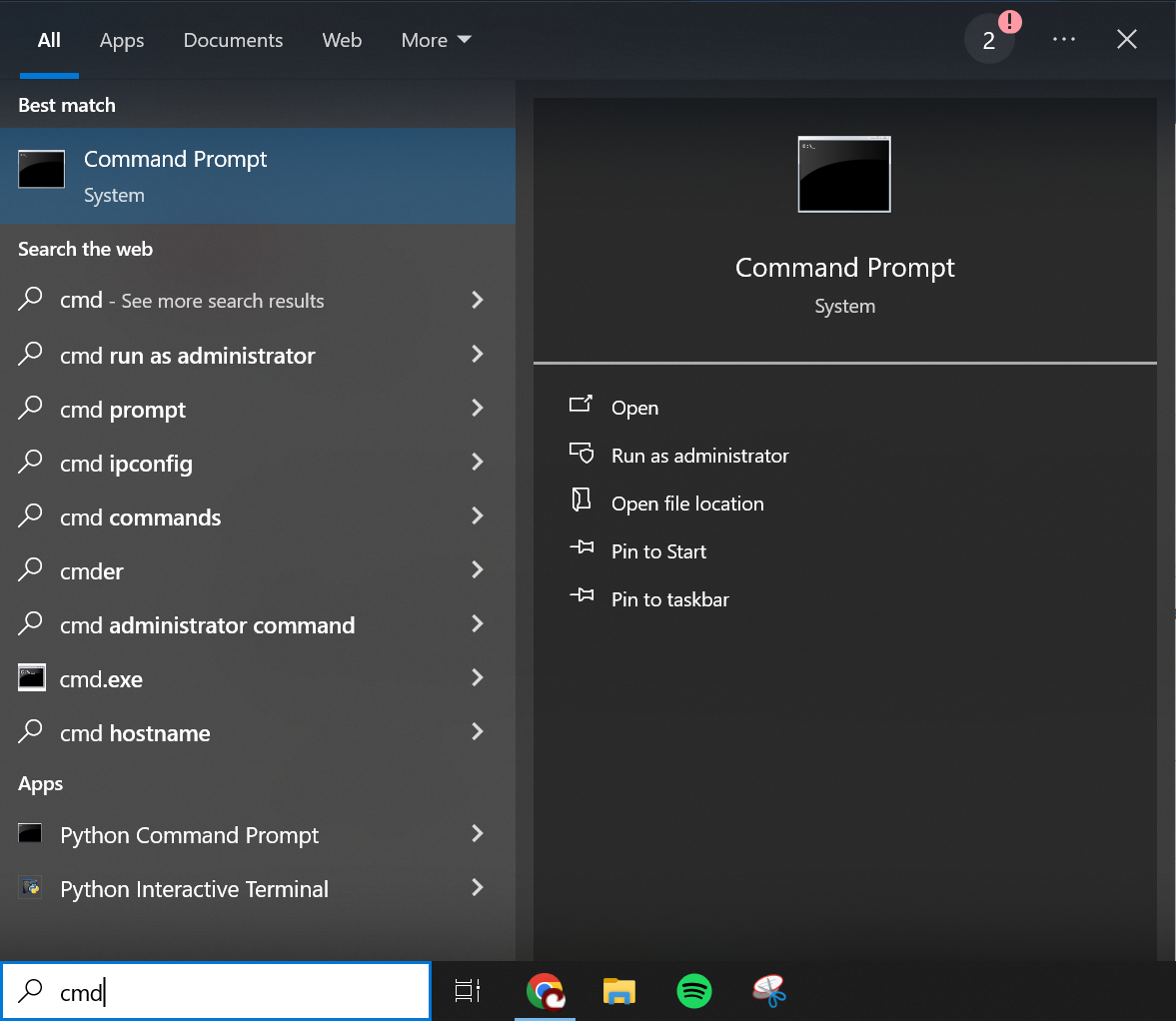

- Open Command Prompt (type "cmd" into the Windows search bar to find it)

- Type "ssh [your username]@[cluster address]" and hit enter

For example, for the user chriss04 on Virginia Tech's TinkerCliffs cluster, I would type "ssh chriss04@tinkercliffs1.arc.vt.edu". The address will depend on your system.

- Type your user password (once prompted) and hit enter

You should now be logged in to the cluster. If it worked, you should see your username on the left side of new lines. From here you can submit "jobs," which are tasks that the cluster will perform. Let's see how to do that.
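If you want to double-check that you are really on the cluster, a few standard Linux commands can confirm it. This is just a quick sanity check; the exact output depends on your system.

```shell
whoami     # prints the username you are logged in as
hostname   # prints the name of the machine you are connected to
pwd        # prints the folder you are currently in (usually your home folder)
```

The machine name should look like one of your cluster's login points rather than your own computer.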

Job Submission Scripts

Most clusters use "Slurm," a Linux-based resource manager which processes the user requests and allocates computer resources. To communicate with Slurm, you will need to write a "shell script" in a language called Bash. It's easier than it sounds!

Open Notepad++ or your source code editor.

- Click "Edit," then "EOL Conversion," then "Unix (LF)"

The reason for this is that the hidden character that signifies a new line is different on Windows and Linux, so the Linux-based system wouldn't be able to read your code properly if you are using the default Windows end-of-line character.
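If a script with Windows line endings has already been uploaded, it can also be repaired on the cluster itself. The following is a minimal sketch, assuming GNU sed (standard on Linux systems); the function name "fix_line_endings" and the file name in the example are hypothetical.

```shell
#!/bin/bash
# Convert Windows (CRLF) line endings to Unix (LF) in place.
# Assumes GNU sed, which supports -i and the \r escape.
fix_line_endings() {
    local file="$1"
    if grep -q $'\r' "$file"; then
        # Remove the carriage return at the end of every line.
        sed -i 's/\r$//' "$file"
        echo "Converted $file to Unix line endings."
    else
        echo "$file already uses Unix line endings."
    fi
}

# Example (hypothetical file name):
# fix_line_endings example_job.sh
```

Setting the end-of-line option in your editor is still the simpler route; this is only a fallback.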

Let's go ahead and name your file as well.

- Click "File," then "Rename"

- Type "[your script name].sh" and click "OK"

Your file will now have the ".sh" extension, which signifies a shell script.

Writing the Script

Let's look at what code the script actually contains. I'll provide an example (running an R script) and explain it line-by-line.

#!/bin/bash

#SBATCH --account=personal

#SBATCH --job-name R_job

#SBATCH --output R_job_output.log

#SBATCH --error R_job_error.log

#SBATCH --cpus-per-task 1

#SBATCH --mem 4G

module load R/4.3.1

Rscript example_script.R

- "#!/bin/bash": Bash scripts begin with this line. The subsequent lines begin with "#SBATCH" and specify what resources are requested. The final lines specify what task should be performed.

- "--account": Some clusters have an "allocation" system, where each user is given a certain number of processing hours, and each job is charged against the account it names. Not all organizations do this; Virginia Tech does, while VCU doesn't. Here I am using my personal account, but you might use your lab's account if you are part of a research lab.

- "--job-name": This names the job. You'll see this name if you check your job's status later.

- "--output": This names the output log file.

- "--error": This names the error log file. This and the previous line are not strictly necessary. If excluded, Slurm will automatically name the log after the job's number (by default, a single file called "slurm-[job number].out" that holds both output and errors).

- "--cpus-per-task": This is the number of processor cores the job will use. Unless you are doing parallel computing, leave it at 1. You can additionally write "#SBATCH --gpus-per-task 1" if you would like to use a graphics processor; again, this is not necessary for many kinds of computing. If not written, no GPU resources will be allocated.

- "--mem": This is the amount of RAM (memory) allocated to the task; the "G" means gigabytes. If it is too low, the task may fail. If it is too high, you are tying up resources that other users could be using. 4 GB is a moderately low amount.

- "module load R/4.3.1": This makes R, version 4.3.1, available to the job. Check what version of the program your cluster has installed and use that version number.

- "Rscript example_script.R": With R loaded, the cluster will then run an R script named "example_script.R".
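To find out which versions are installed, many clusters provide the "module avail" command, run from the cluster's command line. The sketch below assumes your cluster uses a module system (the script above already relies on "module load"); the function name "list_r_modules" is hypothetical, and the listing format varies between systems.

```shell
#!/bin/bash
# List installed modules whose names match "R", if a module system is present.
list_r_modules() {
    if type module >/dev/null 2>&1; then
        # Many module systems print their listing to stderr, so merge it into stdout.
        module avail R 2>&1 | head -n 40
    else
        echo "The module command is not available in this shell."
    fi
}

list_r_modules
```

Pick a version from the listing and use exactly that name in the "module load" line of your script.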

These are just some basic options. Many more options can be set with "#SBATCH". Also, you can write more complex shell scripts that contain for loops, automating the submission of many jobs with just one script. That is beyond the scope of this guide, but I recommend exploring Stack Overflow for examples and ChatGPT for help writing the code.
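To give a rough idea of what such a loop can look like, here is a minimal sketch that submits several job scripts in one go. The function name "submit_all", the "SUBMIT" variable (set it to "echo" for a dry run), and the file names in the example are all hypothetical; treat it as a starting point rather than a finished tool.

```shell
#!/bin/bash
# Submit every job script passed as an argument.
# SUBMIT defaults to sbatch; running with SUBMIT=echo only prints the file names.
submit_all() {
    local submit="${SUBMIT:-sbatch}"
    local script
    for script in "$@"; do
        if [ -f "$script" ]; then
            "$submit" "$script"
        else
            echo "Skipping $script: file not found" >&2
        fi
    done
}

# Example (hypothetical file names):
# submit_all job_1.sh job_2.sh job_3.sh
```

Trying it first with "SUBMIT=echo" shows which files would be submitted without using any cluster resources.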

Submitting the Script

Now that your script is written, save it ("File" then "Save").

- Upload it to the cluster as you did in "Setting Up Files"

- Return to the command line where you logged in to the cluster

- Type "sbatch [your script name].sh"

If your script is written properly, it should respond with "Submitted batch job [number]."

- Type "squeue -u [username]"

If your job is waiting or running, this will show some information like the name of the job and how long it's been running. Keep checking on it periodically until no jobs are listed (this means they have finished). In the example image, I used a script that submits 5 jobs, so I should see 5 jobs listed, and 0 once they have finished.
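Instead of retyping the command, the periodic check can be automated. The following is a minimal sketch that uses "squeue -h" (which hides the header line) and waits until nothing is listed; the function name "wait_for_jobs" and the 60-second default are hypothetical choices.

```shell
#!/bin/bash
# Wait until squeue lists no jobs for a user, checking every few seconds.
# Usage: wait_for_jobs [username] [seconds between checks]
wait_for_jobs() {
    local user="${1:-$USER}"
    local interval="${2:-60}"
    # With -h there is no header line, so empty output means no jobs remain.
    # Note: empty output from a failed squeue call would also end the loop.
    while [ -n "$(squeue -h -u "$user")" ]; do
        sleep "$interval"
    done
    echo "No jobs left in the queue for $user."
}

# Example, using this guide's example username:
# wait_for_jobs chriss04 60
```

Keep the interval reasonably long, since very frequent checks put extra load on the scheduler.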

From here, you can download the outputs of your program using the same file transfer method you used to upload your files. Voila! Hopefully it worked. If not, check the text in the error file and see if you can troubleshoot.
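You can also peek at the log files directly on the cluster before downloading anything. This is a minimal sketch; the function name "show_job_logs" is hypothetical, and the file names in the example are the ones set by "--output" and "--error" in the example script.

```shell
#!/bin/bash
# Print the last 20 lines of each log file passed as an argument.
show_job_logs() {
    local log
    for log in "$@"; do
        if [ -s "$log" ]; then
            echo "== Last 20 lines of $log =="
            tail -n 20 "$log"
        else
            echo "== $log is missing or empty =="
        fi
    done
}

# Example:
# show_job_logs R_job_output.log R_job_error.log
```

An empty error log is usually a good sign, while the last few lines of a non-empty one often point to the problem.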

Once you're done using the cluster, delete any files from its storage system so as to not waste space.