Climbing to New Heights: a Novel Device for Elevation Detection and Navigation for the Blind

by ShreyaMajumdar22 in Circuits > Assistive Tech

Introduction

Blindness is a disability in which individuals experience partial or total loss of vision. Forms include color blindness, total blindness, and others. An individual is legally blind in the United States if their best-corrected vision in the better eye is 20/200 or worse, or if their field of vision is 20 degrees or narrower (Zuckerman, 2004). Blindness affects a large portion of the population, and its prevalence is increasing. As of 2020, an estimated 43.3 million people are blind and a further 295 million have moderate to severe vision impairment (Bourne et al., 2021). Statistically, blindness rates increase with age (CDC, 2020). American adults who are blind or visually impaired struggle with many everyday tasks, including navigation. This is especially true in new environments, where the person encounters both physical and social obstacles.

Our client, who is also a client of our partner, the Seven Hills Foundation in Worcester, MA, has described their personal struggles with navigating hallways within the Seven Hills facility. Navigation becomes especially difficult in crowded areas. The client identified two types of physical obstacles that they regularly face: horizontal changes and vertical changes. A horizontal change occurs when the walking surface stays at the same elevation but shifts in material (e.g., walking from hardwood flooring onto a rug), and a vertical change is a shift in the elevation of the walking surface (e.g., stepping from a sidewalk down to a road). The client described vertical changes as especially difficult because they are hard to anticipate and can cause the client to trip (Client, personal communication, 2021).

Target Audience

This device is intended to provide our client with a method by which to navigate stairs and other sudden elevation changes as they are walking. While some of the styling was tailored to the client's interests, this aspect can be changed to cater towards the general population of people who are legally blind to help them with this navigational challenge.

Intended Device

Our group has built a camera-based wearable device that alerts the user when they are approaching a sudden change in elevation.

Supplies

Here is the list of supplies we used to make the device:

Raspberry Pi Camera Module (2 Megapixels) - $6.99 (1)

Raspberry Pi 3 Model B+ - $20.00 (1)

YOLOv5 Object Detection Algorithm - $0.00 (1)

Raspberry Pi 4 Battery Pack UPS - $23.99 (1)

Piezo Buzzer - $6.99 (1)

Lanyard - Approx. $10.00 (1)

ABS 3D Printing Filament - $18.99/lb. (1)

All items are available at www.amazon.com.

Tools used during project:

SolidWorks

GrabCAD

Visual Studio Code

Ruler

Screwdriver

Wrench

Competitor Analysis

Competitor #1: Intel-Sponsored Backpack (image on left)

The first competitor analyzed was a backpack developed recently by a student at the University of Georgia. The device consists of a laptop carried in a backpack, a vest with a camera inside, a waist pack, and a Bluetooth earpiece. Using AI-powered cameras, the device helps the user become aware of elevation changes at curbs, as well as crosswalks, people, changes in traffic lights, and other objects such as trash cans. All instructions are delivered to the user through the Bluetooth earpiece. Additionally, the device offers a novel command that tells the user which objects surround them, along with each object's clock position relative to the user's body (Alexiou, 2021).

The developer claims that most devices that function in a similar way are much bigger and heavier, which makes this device a significant improvement on more cumbersome models. Additionally, the device is inconspicuous: backpacks and headphones are commonly worn among the general population, so a user is much less likely to be identified as blind and receiving help, which avoids the embarrassment that the client expressed (Alexiou, 2021).

The device has been praised by various news outlets and won the OpenCV Spatial AI 2020 competition. Although it provides hope for the future of assistive technology for blindness, its design cannot readily detect a wide range of surface changes, which makes it challenging for the user to negotiate stairs and other vertical changes in surface. Additionally, although it offers more specific communication than a guide dog, it provides none of the emotional support that a dog does (Alexiou, 2021).

Competitor #2: Lazzus (image on top right)

The second major competitor currently on the market is an app developed by Nuevo Sentido Tecnológico Realidad Aumentada Sociedad Limitada, a company based in Spain. Many technologies have been developed to help the visually impaired, but most simply guide the user from Point A to Point B and pay little attention to the many obstacles along the way. Lazzus aims to fill that gap. It is a free mobile application that uses audio cues to make the user aware of the outdoor furniture in their vicinity (Nuevo Sentido Tecnológico Realidad Aumentada Sociedad Limitada, 2019).

Although it has proved highly effective for most blind users and is trusted by many, Lazzus does not let users navigate with the phone in their pocket. Hands-free operation would improve both productivity and convenience because it would leave the user with a free hand while navigating. In addition, the app is designed mainly for those who are completely blind, so it does not meet the criterion of serving as many visually impaired individuals as possible (Nuevo Sentido Tecnológico Realidad Aumentada Sociedad Limitada, 2019).

Competitor #3: WeWALK (image on middle right)

White canes tell the user what is on the ground, but they cannot reveal obstacles above it, especially near the torso or head. WeWALK is a smart cane that uses an ultrasonic sensor to let users know what they are passing and when they are passing it. It also uses GPS and voice technology to keep the user informed. When a dangerous object is detected, the device vibrates to warn the user. One clear flaw of this system is that it does not describe what kind of object is present, only that an object is there. The device can also connect to Google Maps and read the corresponding directions aloud as the user walks to their destination. Another main flaw is that it costs at least $500.00; although this is expensive, the article claims that it is much cheaper than most comparable devices. All in all, this device seems to be effective for many users, but there is clear room for improvement (Matchar, 2019).

Competitor #4: Aira (image on bottom right)

Aira is an app that has been helping visually impaired individuals navigate for years. It connects users to Aira agents who guide them through their everyday lives. These agents are both trained and authenticated so that the user has the best possible experience. Users are guaranteed access to someone who is their friend or family member so that they are as comfortable as possible (Dabney, 2018). Aira is reported to be able to inform the user of traffic levels on the street and to recognize faces that have been entered into the system, usually those familiar to the user. It can also take the user's personal information into consideration, such as medical history, hobbies, and other details (Dabney, 2018).

Confidentiality is a central value of the application, so users like the client are unlikely to feel embarrassed. One challenge is that the app does not function well without an internet connection, and it can cost upwards of $300.00 per month, which is undesirable for the user. Lastly, like the other competitors, it cannot detect the precise changes in surface that would allow the user to avoid tripping and the resulting injuries (Dabney, 2018).

Requirements

Click here to see a full list of our requirements, as well as how well the competitors and each of the prototypes scored against the criteria.

Building Process

Phase 1: Plan 3 designs

In the first part of the process, three distinct designs were created with different aims. The first design focused on adapting the environment to the user's needs by installing a system of sensors in a room that could detect an elevation change and alert the user. The second design was an attachment to a white cane that would use cameras to identify upcoming elevation changes. Finally, the third design was a wearable system of sensors that would alert the user to obstacles.

Phase 2: Ad hoc testing

In the second part of the process, a series of ad hoc tests was conducted to determine the best design to pursue. The tests compared the accuracy of sensors with that of cameras to determine the best detection system. A camera paired with a machine-learning algorithm for identifying obstacles proved more accurate, which led us to use cameras. In addition, a wearable device was found to be more dynamic in detection than a cane attachment, so the initial prototype combined the second and third designs.

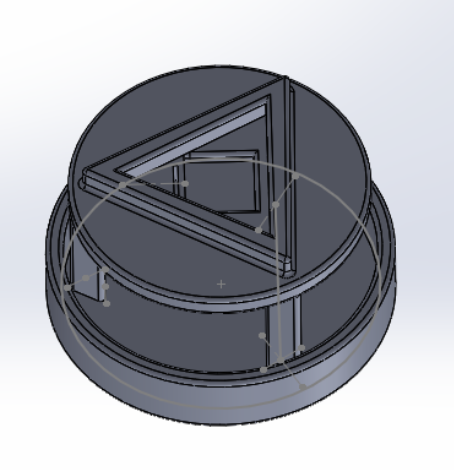

Phase 3: CAD Models

In this stage, the initial prototype was modeled in SolidWorks using Computer-Aided Design (CAD). This allowed the model to be 3D printed with high accuracy.

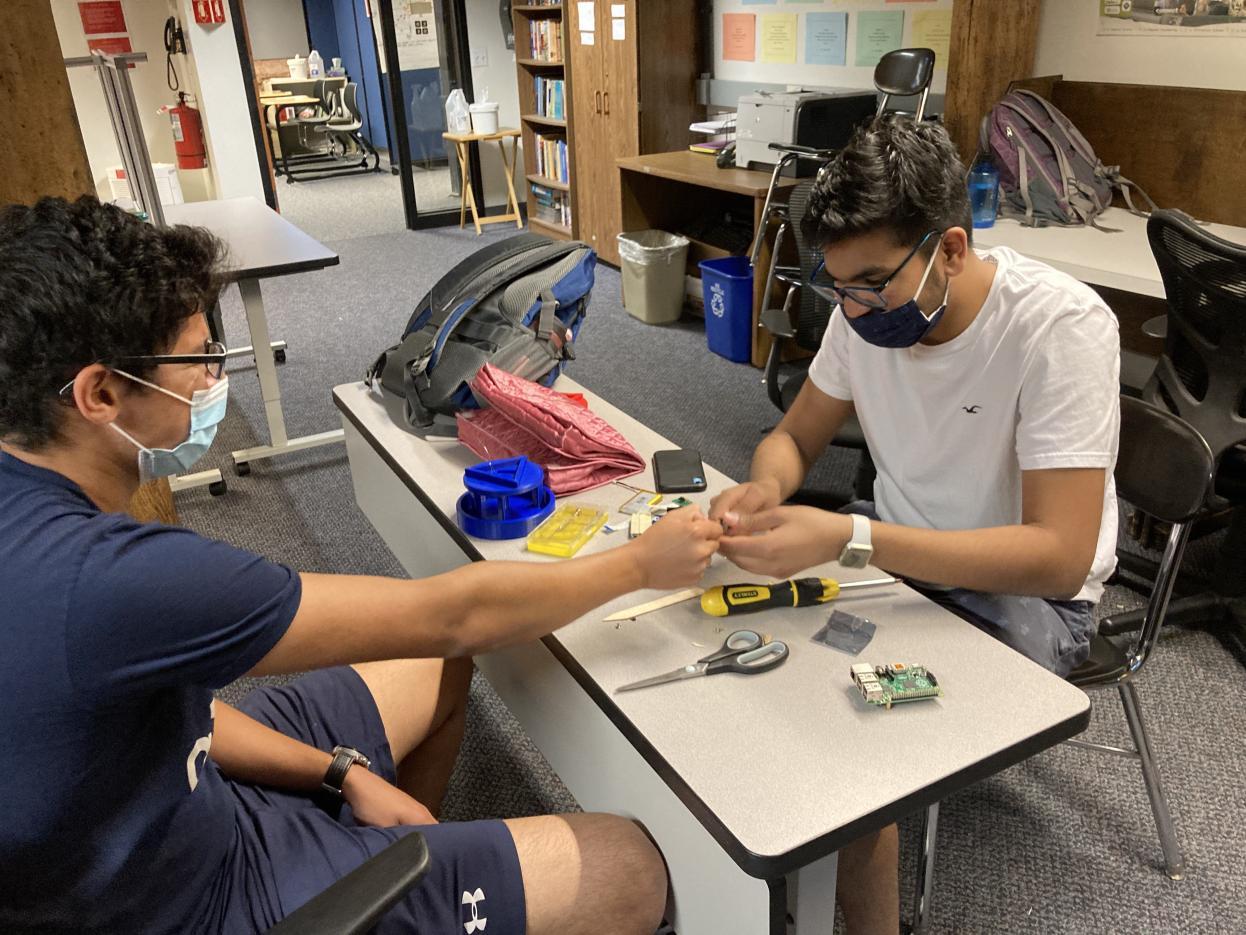

Phase 4: Circuitry

A series of circuits was built to connect the Raspberry Pi to the camera and to the piezo buzzer, creating the connection between the detection and alert systems of the device.
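The alert side of this circuit can be illustrated with a minimal Python sketch. It is not the project's actual code: the `beep` helper and the `FakeBuzzer` stand-in are hypothetical names, and the sketch assumes an active buzzer that sounds whenever its pin is driven high. On the Pi itself, any object exposing `on()` and `off()`, such as a `gpiozero.Buzzer`, could be passed in instead; the GPIO pin depends on the wiring, which is not specified here.

```python
import time


class FakeBuzzer:
    """Hypothetical stand-in for a real buzzer so the sketch runs without hardware."""

    def __init__(self):
        self.events = []  # records every on/off call in order

    def on(self):
        self.events.append("on")

    def off(self):
        self.events.append("off")


def beep(buzzer, times=3, on_s=0.2, off_s=0.1, sleep=time.sleep):
    """Pulse the buzzer `times` times and always leave it switched off.

    `buzzer` is any object with on() and off() methods. The timing values
    are illustrative assumptions, not the ones used in the device.
    """
    for _ in range(times):
        buzzer.on()
        sleep(on_s)
        buzzer.off()
        sleep(off_s)


# Demo with the fake buzzer; the no-op sleep keeps the demo instantaneous.
demo = FakeBuzzer()
beep(demo, times=2, sleep=lambda seconds: None)
print(demo.events)
```

Passing the buzzer and the sleep function in as arguments keeps the beep pattern testable on a laptop before it is tried on the wearable.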

Phase 5: Algorithm building

Because the design is camera-based, a machine learning algorithm was created to parse the camera feed and identify elevation changes. Because it is a crucial component of the design, the algorithm underwent extensive testing and refinement.
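One way to turn per-frame detections into an alert decision is sketched below. This is an illustration under stated assumptions, not the algorithm we trained: the class names in `ELEVATION_LABELS`, the 0.5 confidence threshold, and the rule of waiting for several consecutive positive frames are all hypothetical choices, and the `Detection` type simply stands in for whatever the object detector returns for each frame.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str         # class name predicted by the detector
    confidence: float  # score between 0 and 1


# Hypothetical class names; the labels of the trained model are not given here.
ELEVATION_LABELS = {"stairs_up", "stairs_down"}


def frame_has_elevation_change(detections, min_confidence=0.5):
    """Return True if any detection is an elevation class at or above the threshold."""
    return any(
        d.label in ELEVATION_LABELS and d.confidence >= min_confidence
        for d in detections
    )


class AlertFilter:
    """Signal an alert only after `needed` consecutive positive frames.

    Requiring a short streak is an assumed way to suppress one-frame
    false positives; it trades a small delay for fewer spurious beeps.
    """

    def __init__(self, needed=3):
        self.needed = needed
        self.streak = 0

    def update(self, detections, min_confidence=0.5):
        if frame_has_elevation_change(detections, min_confidence):
            self.streak += 1
        else:
            self.streak = 0  # any negative frame resets the count
        return self.streak >= self.needed
```

In use, `update` would be called once per camera frame, and the buzzer would sound whenever it returns `True`.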

Phase 6: Final Assembly and Testing

After the three components of the device were created, they were assembled on a lanyard, producing a wearable device that could be tested. Testing measured the accuracy of the detection and alert system as the wearer walked towards stairs.
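Walking trials like these could be scored with a short script such as the one below. It is a sketch only: the `score_trials` function and the record format, one `(stairs_present, device_beeped)` pair per trial, are assumptions made for illustration and do not come from our test logs.

```python
def score_trials(trials):
    """Summarize walking trials given as (stairs_present, device_beeped) pairs.

    Returns accuracy, precision, and recall; a ratio with an empty
    denominator is reported as 0.0 instead of raising an error.
    """
    tp = sum(1 for present, beeped in trials if present and beeped)
    fp = sum(1 for present, beeped in trials if not present and beeped)
    fn = sum(1 for present, beeped in trials if present and not beeped)
    tn = sum(1 for present, beeped in trials if not present and not beeped)
    total = len(trials)
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


# Placeholder trials for demonstration only, not measurements from the project.
example = [(True, True), (True, False), (False, False), (False, True)]
print(score_trials(example))
```

Recall is the figure that matters most for safety here, because a missed staircase is more dangerous than an unnecessary beep.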

Usage

How To Use:

1. Turn the switch on

2. Put the lanyard around your neck, and position the device on your torso so that the triangle faces forward

3. Walk as you would normally

4. When the device beeps, you are near an elevation change. Walk more carefully and watch out for the obstacle.

Safety:

1. The device can only alert you to nearby obstacles, so always walk with caution

Care:

1. Protect from water because the device contains multiple electrical components

Improvements and Extensions

To improve upon this design, a few paths have been identified:

1. Improve the elevation algorithm. The model is significantly less accurate at identifying downhill elevation changes than uphill ones. Therefore, the model must be retrained with a refined dataset to improve detection of downhill changes.

2. Reduce the bulk of the design. The "arc-reactor" housing protrudes from the torso much farther than a typical neckpiece, which can make the device feel awkward to use. In redesigning the piece, the distance it extends from the chest will be minimized.
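As a first step towards refining the dataset for the first improvement, it may help to check how many uphill and downhill examples the training labels contain. The sketch below assumes the annotations are stored in the usual YOLO text format, one line per box written as `class_id x_center y_center width height`; the `count_classes` helper is a hypothetical name, and whether class imbalance actually explains the accuracy gap is an open question.

```python
from collections import Counter


def count_classes(label_texts):
    """Count annotated boxes per class id across YOLO-format label texts.

    `label_texts` is an iterable of strings, each holding the contents of
    one label file. Lines that do not have exactly five fields are skipped.
    """
    counts = Counter()
    for text in label_texts:
        for line in text.splitlines():
            parts = line.split()
            if len(parts) == 5:  # class_id x_center y_center width height
                counts[int(parts[0])] += 1
    return counts


# Illustrative label contents only; class ids 0 and 1 are assumed to mean
# uphill and downhill changes, which the real dataset may number differently.
sample = ["0 0.5 0.5 0.2 0.2\n1 0.3 0.3 0.1 0.1\n", "0 0.4 0.6 0.3 0.2\n", ""]
print(count_classes(sample))
```

A large gap between the two counts would suggest collecting more downhill images before retraining.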

Resources and References

Alexiou, G. (2021, March 24). Cutting Edge Intel AI-Powered Backpack Could Replace A Guide Dog For Blind People. Forbes. https://www.forbes.com/sites/gusalexiou/2021/03/2...

Bourne, R., Steinmetz, J. D., Flaxman, S., Briant, P. S., Taylor, H. R., Resnikoff, S., Casson, R. J., Abdoli, A., Abu-Gharbieh, E., Afshin, A., Ahmadieh, H., Akalu, Y., Alamneh, A. A., Alemayehu, W., Alfaar, A. S., Alipour, V., Anbesu, E. W., Androudi, S., Arabloo, J., … Vos, T. (2021). Trends in prevalence of blindness and distance and near vision impairment over 30 years: An analysis for the Global Burden of Disease Study. The Lancet Global Health, 9(2), e130–e143. https://doi.org/10.1016/S2214-109X(20)30425-3

Centers for Disease Control and Prevention. (2020, June 4). Common eye disorders and diseases. https://www.cdc.gov/visionhealth/basics/ced/index...

Dabney, M. (2018, August 21). Aira at a Glance: Who We Are and What We Do. Medium. https://medium.com/aira-io/aira-at-a-glance-who-w...

Matchar, E. (2019, October 17). This Smart Cane Helps Blind People Navigate. Smithsonian Magazine. https://www.smithsonianmag.com/innovation/smart-c...

Mishra, A. (2021, November 2). Mishra, Arnav—Project Notes 2020-2021 [Online Text Editor]. Google Docs. https://docs.google.com/document/d/1fdChRMXmxVf08...

Nuevo Sentido Tecnológico Realidad Aumentada Sociedad Limitada. (2019, June 30). Lazzus (The mobility assistant for the blind and visually impaired). CORDIS. https://cordis.europa.eu/project/id/833513/report...

Panëels, S., Cooperstock, J., Blum, J., & Varenne, D. (2013, June 7). The Walking Straight Mobile Application: Helping the Visually Impaired Avoid Veering. https://smartech.gatech.edu/handle/1853/51516

Rizwan, I., & Neubert, J. (2020). Deep learning approaches to biomedical image segmentation. Informatics in Medicine Unlocked, 18, 100297. https://doi.org/10.1016/j.imu.2020.100297

Ying, J., Li, C., Wu, G., Li, J., Chen, W., & Yang, D. (2018). A deep learning approach to sensory navigation device for blind guidance. 2018 IEEE 20th International Conference on High Performance Computing and Communications; IEEE 16th International Conference on Smart City; IEEE 4th International Conference on Data Science and Systems (HPCC/SmartCity/DSS), 1195–1200. https://doi.org/10.1109/HPCC/SmartCity/DSS.2018.0...